I build with a strong focus on privacy (offline/local models), inclusivity (Hindi-English bilingualism), and edge efficiency (optimized, low-latency pipelines). My stack includes tools like LLaMA via Ollama, YOLOv8, DeepFace, FAISS, and native Android development using Jetpack Compose.

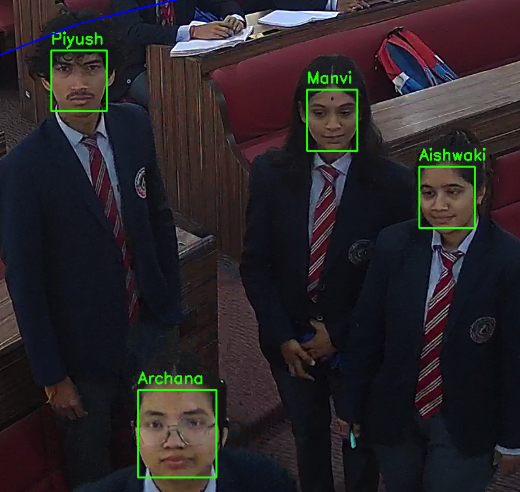

Over the last few years, I’ve worked across diverse AI domains, from face recognition and posture correction to multilingual AI assistants and personalized recommendation engines. Many of my projects grow out of everyday problems I run into as a student or developer: automating classroom attendance, understanding human behavior through Reddit activity, or making offline AI tools accessible in Hindi and English.

While most of my work is open-source and developed independently, I’ve also collaborated on hackathon and team projects, often winning or placing at national-level events. I value hands-on, end-to-end execution, from data pipelines and model training to deployment and optimization. Some systems, like DeepTrack and CollegeBot, required integrating several complex modules (local LLMs, YOLO-based object detection, TensorFlow models, and speech recognition) so that they all run together in a resource-constrained setting.

I approach AI with a builder's mindset, but I’m also drawn to the ethics, generalization, and long-term impact of deploying such systems at scale. A question I keep returning to is: can we build AI systems that are both useful and context-aware without requiring massive cloud infrastructure or exposing private data?

Some of my other public projects include:

- YogAI: Posture correction for yoga sessions using webcam-based pose classification.

- RedditUserPersona: Behavioral analysis and persona generation from scraped Reddit activity using local LLMs.

- BonelossDetector: A dental AI model for identifying alveolar bone loss in X-rays using YOLOv8.

- NextBest: Dual-mode recommendation engine (movies and anime) powered by content-based filtering.

- FaultXpert: Micro-fault detection system for physical systems with real-time alerts.

My work continues to evolve. I’m currently experimenting with lightweight RAG-based tools, emotion-aware models, and agents that can reason over voice, text, and image inputs, all while remaining fully offline or edge-deployable.